Diving into the basics of AI

Using artificial intelligence, but not sure you understand it? This primer is for you.

As we wrap up 2023, it’s safe to say this was the year artificial intelligence entered the chat. While AI-powered tech has been around for some time — from voice assistants to personalized shopping recommendations — more recent advancements in neural networks and deep learning have pushed the technology to breakthrough capabilities.

The latest AI mimics human cognitive functions like learning and problem-solving. Complex machine learning systems are trained on vast amounts of data to recognize patterns, make predictions, and make decisions. Unlike traditional algorithms, these AI models can adapt to different tasks without extensive manual coding by engineers. The newest tools have made it easy for anyone to apply machine intelligence to their everyday tasks.

As collective enthusiasm for AI grows, corporations and governments are scrambling to understand, regulate, and capitalize on this emerging technology. The general consensus is that AI will best be used — in the near term, anyway — to assist human workers, not replace them (phew).

While many of us are already using AI chatbots and platforms in our daily lives, the technology is evolving faster than most of us can keep up with. At Modus, we’re helping businesses apply AI to boost productivity, stay competitive, and better connect with customers. For those just getting started, now is the time to learn the basics so you can use AI responsibly.

Understanding AI’s evolution

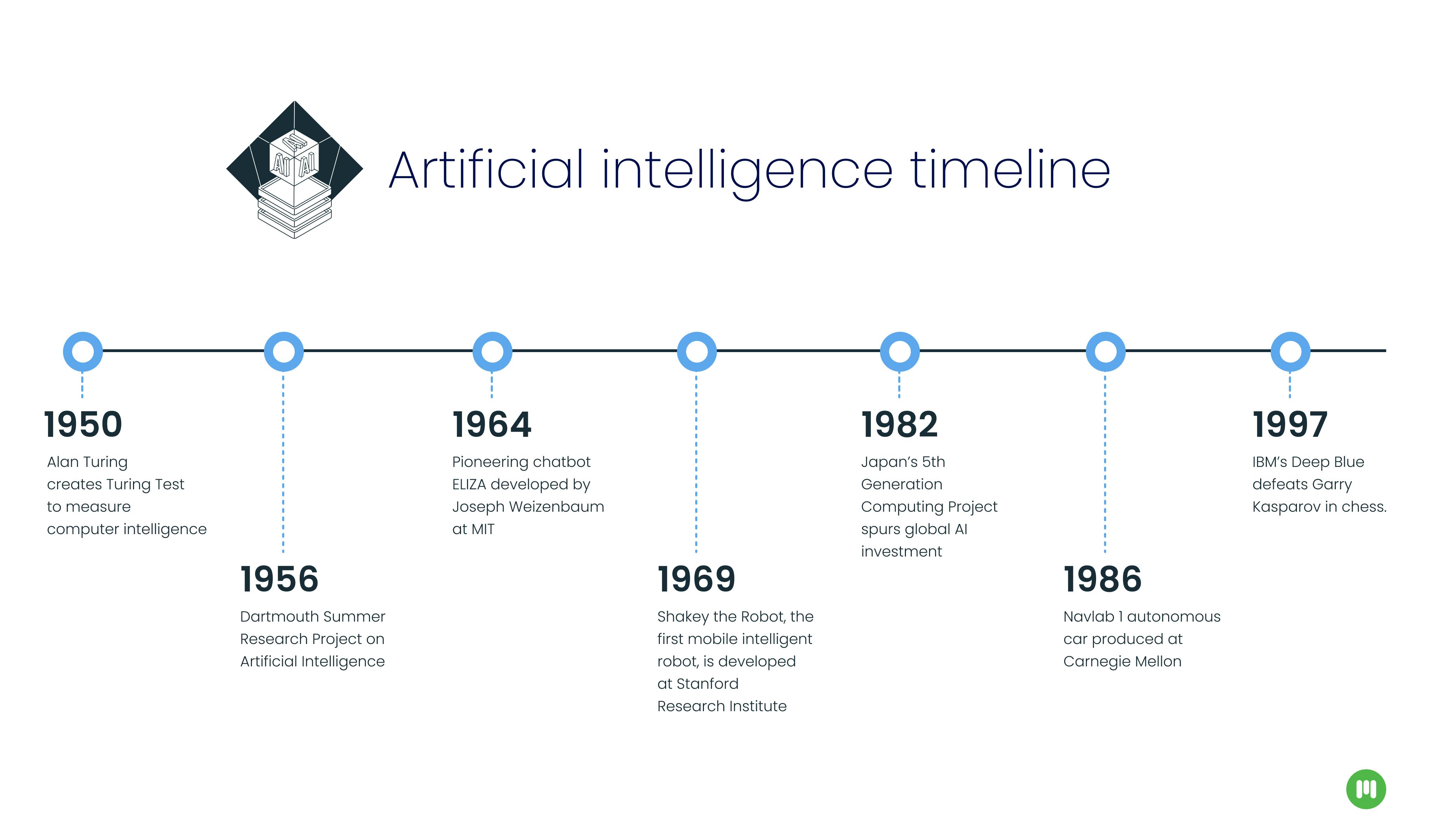

First, let’s break down what AI is and how we got here. Simply put, artificial intelligence is an umbrella term for technologies focused on training computers to simulate human intelligence. It’s generally accepted that the term “artificial intelligence” emerged at a conference at Dartmouth College in 1956. However, the concept of machine intelligence was introduced in 1950 in an essay by Alan Turing. His paper asked the question, “Can machines think?” In what has come to be known as the Turing Test, the computer scientist proposed a way to measure whether a computer could be as “smart” as a human.


Of course, early computers had a long way to go. For example, they could execute commands, but not store them. While Joseph Weizenbaum’s creation of ELIZA, a natural language processing computer program, was seen as a breakthrough in 1965, the decades that followed are known as the “AI Winter” due to setbacks and lack of funding.

Then came the Internet age, ushering in a massive amount of data and fueling the development of machine learning. Machine learning is the process by which AI tools “learn” to recognize patterns and predict outcomes or make decisions using algorithms and neural networks — brain-inspired computing systems.

This period also saw major advances in natural language processing, speech recognition, computer vision, and robotics. A significant milestone occurred in 1997 when IBM's Deep Blue supercomputer defeated world chess champion Garry Kasparov. Even so, in this early wave of AI, the technology was limited to predictive models.

“In the 2010s things started to pick up… people started asking a different question when it came to developing AI,” said Nicholas Lituczy, Associate Creative Director, UX, at Modus. “Instead of creating these deterministic models where we're going to tell it everything, which is also kind of a problem because it's dependent on what you think of the world… we're going to see if the computer can figure that out for itself. Can we create a statistical model that allows it to just categorize things on its own?”
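To make the contrast in that quote concrete, here is a minimal Python sketch under stated assumptions. The spam-filter scenario, the four training messages, and every function name are hypothetical and chosen only for illustration. The first function is a deterministic rule a person writes by hand; the second approach counts words in labeled examples and lets those counts decide.

```python
from collections import Counter

# Hypothetical labeled examples, invented purely for illustration.
EXAMPLES = [
    ("win a free prize now", "spam"),
    ("claim your prize today", "spam"),
    ("lunch meeting at noon", "ham"),
    ("see you at the meeting", "ham"),
]

def rule_based_is_spam(text):
    """Deterministic approach: a person decides the rule in advance."""
    return "free money" in text.lower()

def train_word_counts(examples):
    """Statistical approach: count how often each word appears per label."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def learned_is_spam(text, counts):
    """Label a message by which set of training words it overlaps with more."""
    words = text.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return spam_score > ham_score

counts = train_word_counts(EXAMPLES)
```

The hand-written rule only catches the exact phrase its author thought of, while the counting version picks up on words like "prize" because they showed up in the examples. Real machine learning systems use far more data and far more sophisticated models than word counts, but the shift is the same: the categories come from the data rather than from a rule someone typed in.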

Enter generative AI

Unlike traditional AI models, which assess huge amounts of data to spot patterns and make predictions, generative AI is able to produce original content that mimics human creativity.

The release of OpenAI’s ChatGPT at the end of 2022 stunned users with its ability to produce convincing, human-like prose. The chatbot tool reached 100 million users in two months, and competitors like Google’s Bard and Microsoft’s Bing quickly followed. These advanced tools use large language models (LLMs), deep learning models trained to interpret human language and generate text in a human-like manner. The scale of these models is enormous. GPT-3 had 175 billion parameters (the numeric weights its neural network adjusts during training), while GPT-4, released this year, is rumored to have 1 trillion.

LLMs process text inputs by decoding strings of words, looking for clues about the context, so that they can predict a response. Generative AI visual tools like Midjourney and DALL-E have also blown away users with their impressive artistic capabilities. For example, check out our virtual art gallery from our “Android Dreams” make-a-thon last year.

While fascinating, these tools can’t deliver much more than prompt completion.

“It's just guessing which word goes after the word that came before, or it's guessing which pixels to cluster together,” said Lituczy. “It's really good at acting as a tool but not an artist. … It doesn’t know why or how it's making any of the things it's making.”
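The idea of "guessing which word goes after the word that came before" can be sketched in a few lines of Python. This is a deliberately tiny stand-in, not how ChatGPT or any production LLM works: real models use neural networks and consider much more context than a single preceding word. The sample sentence and the function names below are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Record which words follow each word in the text, and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical training text, invented for illustration.
CORPUS = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(CORPUS)

print(predict_next(model, "the"))  # "cat" follows "the" most often here
print(predict_next(model, "dog"))  # None: "dog" never appears in the text
```

The predictor has no notion of what a cat or a sofa is. It only knows which word tended to come next in the text it was given, which is the limitation Lituczy describes.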

Promise and peril

As AI breaks out of its early adopter phase, the technology brings exciting possibilities, but its potential is not without risk. The business world must prioritize responsible, ethical safeguards to prevent unintended, biased, misinformed, and/or malicious outcomes.

This is where human-centered innovation will lead the way. By collaborating with technologists, researchers, business leaders, and policymakers, we can achieve the vision of AI to improve lives and solve some of the world’s biggest problems.

Stay tuned for our next installment on AI, where we will dive into the best use cases for this groundbreaking technology.